Data Management Services

Our proven Seven Streams Reference Framework for Data Management, along with the DW2.0 Reference Architecture, strikes a balance between the long-term architectural view and the real-world need for quick, effective solutions. This approach drives capability maturity improvement across the data management function, providing immediate and sustainable value.

We offer a suite of services, Methodologies, Play Books, Jump Start Process Guides and Templates, together with our proven Spiral Methodology oriented project planning template, to accelerate development of mature and sustainable enterprise-class Data Warehouse, Business Intelligence and Big Data Analytic Solutions.

[accordions collapsible=true][accordion title=”Business Discovery Engagement”]

The focus here is on identifying the “burning questions”: those which, if answered, would enable decisions that make a major difference to the company’s bottom line. These burning questions fall into two major categories: daily operational decision support, and strategic longer-term decision support based on analysis of trends and patterns over time. Once the questions have been identified, they are prioritized and broken into logical phases for implementation in the warehouse.

We leverage our proven Joint Requirements Planning (JRP) service to expedite discovery of these key scoping insights. Following the JRP sessions, data requirements are defined: the data needed to answer the burning questions is specified, and pertinent business definitions and rules (“metadata”) are agreed upon. Sources of the data are also listed, and a preliminary list of Data Ownership and Data Stewardship roles is defined and agreed upon. A plan is then drawn up specifying the steps necessary to develop the Data Warehouse as scoped in this work segment. The plan is included in the Scope Document, which summarizes the major deliverables of this segment.

Several JRP workshops are typically held to address the following topics:

- Data Warehouse Project Initiation

- Project Planning

- Develop a High-Level Subject Area Diagram

- Logical Data Model For the First Data Warehouse Project

- Data Warehouse Requirements Gathering

Business Outcome

This service rapidly enables the organization to accurately scope its Enterprise Data Warehouse and BI requirements and to define initiatives to address them. This provides the organization with a clear picture of what is required to satisfy its most pressing Operational and Strategic Decision Support needs.

This engagement can typically be completed in a four- to six-week time box, depending on the breadth of business coverage required.[/accordion][accordion title=”Enterprise Data Warehouse & BI Strategy Engagement”]

The EDW and BI environment is not a static product; it is an ongoing program. The EDW will continue to grow and evolve as your business grows and evolves, taking in new categories of data such as unstructured text, streaming, and other types of data content. Normally, changes to the EDW will be implemented through controlled quarterly releases.

In order to sustain this amount of recurring change, it is imperative that a “big picture” exists: an overall solution architecture within which the successive releases can be built, and within which impact of change can be accurately determined. The strategy takes stock of “where we are now, compared with where we ultimately will be in the years to come”, and specifies what infrastructural changes will be necessary with each successive phase of the EDW and BI Environment. Vendors of hardware and software are brought into the loop at this stage to complete a strategic roadmap view for how the environment will evolve at the technical level.

Several JRP workshops are held to define and get agreement on the path the environment will take, e.g.:

- Data type and source categorization

- Establishment of Metadata Requirements

- End User Access Tool Requirements

- End User Data Warehouse Access Requirements and Documentation

- Data Warehouse Infrastructure Assessment

- Data Warehouse Capacity Planning

Business Outcome

This engagement results in a clear specification of the strategy for supporting the EDW and BI program. This is an essential up-front step to ensure that the program is sustainable over time, and to protect the organization’s expected ROI on the program.

Duration

This engagement can usually be completed within four to six weeks.[/accordion][accordion title=”Design and Construction Engagement”]

This segment includes the implementation and roll-out to the subject environment. Activities include installation of hardware and software, detailed design and construction of all elements of the EDW environment (including data structures and transformation mechanisms), user training, testing and validation, and final cut-over to “live” status. We also conduct a post-implementation review to preserve lessons learned, thereby positioning the organization for ongoing success.

Business Outcome

Gavroshe assists its clients in implementing and rolling out their first phase, addressing the highest-priority “burning questions” as specified in the Business Discovery segment. From this base, the organization’s business community can get answers to the key issues that will favorably impact the organization’s revenues, expenses, and overall operational efficiency. It also provides an extensible foundational platform upon which to deploy incremental new capability.

Use of the EDW will also highlight data integrity problems in the Operational Application Systems. These areas can then be given the focused attention necessary to cleanse the data in preparation for transfer into the new Application systems envisaged. This, together with the institution of a Data Stewardship program, will ensure that the integrity of the organization’s Data Resource is given the attention it deserves.

Another outcome of using the EDW is normally to highlight areas in the business where processes are operating inefficiently or “bottlenecking”. This provides direction for further Business Process Improvement, and also provides a mechanism for measuring the before and after situation related to a targeted Business Process, thereby providing a measure of the effectiveness of the Business Process Improvement efforts.

[/accordion][accordion title=”Metadata”]

Metadata is an integral part of the Data Warehouse environment. The demand for data warehousing and high-level decision support is increasing dramatically, especially since the advent of Big Data. Organizations are being challenged to roll out ever-increasing functionality in their data warehouses to an expanding audience of end users.

Understanding the data in the warehouse, and where its content comes from, once seemed a manageable job; a small handful of subject area experts could be relied on to maintain the knowledge of the data in the warehouse and its related business rules. However, as the scope of data warehouses continues to expand dramatically, that small handful of experts has grown into a crowd. This crowd of business knowledge owners requires a better, more standardized way to document and communicate their knowledge of the warehouse, its rules and its data sources.

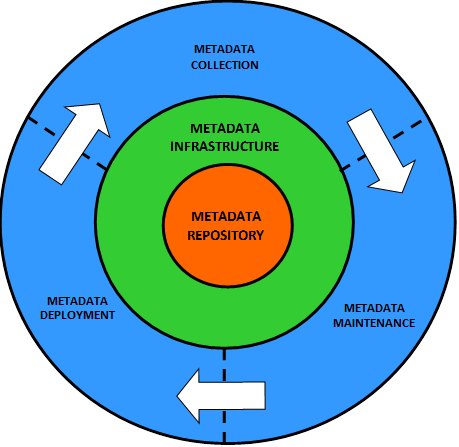

The diagram depicts the five major functional areas that Gavroshe addresses as part of a successful Metadata initiative.

For more information on how Gavroshe can assist your organization in creating a sustainable and active metadata environment please contact us.

[/accordion][accordion title=”Data Quality Management”]

Data quality problems currently cost U.S. businesses in excess of US$600 billion per year, according to interviews with industry experts, customers, and survey data. Much of the data residing in legacy systems and data warehouses today is of poor quality. Any interface may potentially introduce data that is not clean. In addition, data may become ‘dirty’ over time due to faulty processes or rules and standards not being adhered to.

Quality data does not necessarily mean perfect data. It is essential to set quality expectations, as deliberate trade-offs are often made between speed, convenience and accuracy. For example, data may be updated weekly, and so the results of a query may be some days out of date. With the arrival of unstructured and streaming data content, the challenges of optimizing the data quality management process have become much larger.

For any data migration to be timely and successful, it is imperative to know that the disparate data sources to be migrated have been ‘cleaned’ and re-engineered into the format or ‘model’ expected by the target system.

Without the ‘knowledge’ that the data to be migrated is right, the migration process must take an iterative, ‘amend & load’ approach, leading to increased project time and cost. (How many loops this iterative approach will go through is a complete guess and every project underestimates the amount of time required).

By introducing a phase into the project to analyze and ‘correct’ the source data anomalies, the actual migration and implementation phases become quantifiable and accurately forecast phases of the Project Plan.

Therefore, the approach to data cleansing and migration must include the following four major components:

- Analysis of the Source Data

- Definition of Data Integrity Criteria

- Data Cleansing

- Physical transformation and migration of the data to the target system

The initial phase of the Cleanse and Migration approach is the Analysis of the source data, working with both the IT and Business communities to address both data accuracy and relevance.

Data Analysis, also known as Data Profiling and Mapping, comes before ANY Migration Project data staging or mapping exercises. It ensures that the project starts from a known point, with a ‘clean’ data platform. This process has the essential effect of reducing project time scales and costs by removing the ‘Trial & Error’ iterative approach. In addition, it enables the organization to identify ‘weak links’ in the Data Supply Chain and provides the opportunity to implement new processes.
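As a rough illustration of what source data analysis and integrity criteria can look like in practice, the following is a minimal Python sketch. It is not a Gavroshe tool: the sample `customers` records, the `profile_column` and `find_violations` helpers, and the two rules are all hypothetical and chosen only to show the idea of profiling a column and checking values against agreed criteria before any migration step.

```python
from collections import Counter


def profile_column(rows, column):
    """Summarize one column: row count, missing values, distinct values, top value."""
    values = [row.get(column) for row in rows]
    present = [v for v in values if v not in (None, "")]
    counts = Counter(present)
    return {
        "rows": len(values),
        "missing": len(values) - len(present),
        "distinct": len(counts),
        "most_common": counts.most_common(1)[0][0] if counts else None,
    }


def find_violations(rows, rules):
    """Return (row_index, column) pairs where a value fails its integrity rule."""
    violations = []
    for index, row in enumerate(rows):
        for column, is_valid in rules.items():
            if not is_valid(row.get(column)):
                violations.append((index, column))
    return violations


# Hypothetical source extract (illustrative data only).
customers = [
    {"id": "1", "country": "US", "email": "a@example.com"},
    {"id": "2", "country": "us", "email": ""},
    {"id": "3", "country": "US", "email": "c@example.com"},
    {"id": "3", "country": None, "email": "not-an-email"},
]

# Hypothetical integrity criteria agreed with the business.
rules = {
    "country": lambda v: v in {"US", "CA", "GB"},
    "email": lambda v: bool(v) and "@" in v,
}

country_profile = profile_column(customers, "country")
violations = find_violations(customers, rules)
print(country_profile)
print(violations)
```

Counting the violations found in an analysis pass like this is one way to size the cleansing effort up front, rather than discovering the same problems one load attempt at a time.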

For the data quality improvement process to be effective, it is necessary to frame it in the context of an overall Data Governance process that involves Data Owners, Stewards, and Managers at the Enterprise/Subject Area level, and to enable it with Data Quality Dashboards, and other tools that make the process effective and efficient.

Gavroshe offers a variety of services and capabilities to address the entire Data Quality Management domain. Contact us to learn more about how our consulting services, playbooks, jumpstart templates, and overall data quality management experience can be leveraged to accelerate your organization’s journey to quality data.

[/accordion][accordion title=”Master Data Management”]

Our focus on Master Data Management (MDM) comprises a strategy, policies, tools and processes to define and effectively manage an organization’s master data (non-transactional, non-volatile data such as reference data, customer master data and product master data).

The goal of MDM is to reduce redundancy, improve the quality of corporate data, and establish a central authority responsible for mission-critical company data that must be shared across the different lines of business within an organization. We see it as one of the foundation stones of integrated, effective corporate Data Governance.

Gavroshe has experience with a number of vendor-supplied MDM solutions. For more information, please contact us.

[/accordion][accordion title=”Data Warehouse Solution Architecture and Delivery”]

The Gavroshe brand of services is rooted in a firm foundation of Enterprise Architecture (EA). This enables us to take a holistic approach to Data Resource Management, Corporate Information Factory / Data Warehouse (including the new Big Data Warehouse), Business Intelligence, Repository Management, Knowledge Co-ordination, Model Management, Business Process Re-engineering, Reverse Engineering and Re-engineering of IT Systems.

We are specialists in the methods, tools and techniques that support a pragmatic approach to EA – one which strikes a balance between a long-term architectural view on the one hand, and the very real need to have quick effective systems implementation and maintenance on the other hand.

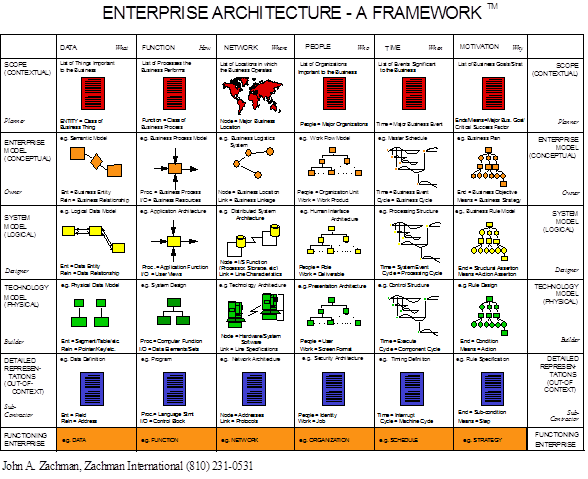

The Zachman Framework for EA

The scope of the EA, in our view, is best illustrated by the Enterprise Architecture Framework as defined by John Zachman.

The first three rows define the implementation-independent business architecture, progressing from a contextual, high-level business view through to a detailed specification of the business processes required to support that view. The business architecture is defined in terms of the six interrogative columns:

- Data architecture (What)

- Functions and process architecture (How)

- Locations/Networks configuration (Where)

- Organization structure (Who)

- Business events (When)

- Business strategies, visions, goals and rules (Why)

The intersection of a row and a column is a cell (one of the squares), which contains the artifacts or objects relevant to that cell; for example, row 3, column 2 contains all the business process objects.

Relationships are maintained:

- vertically representing the transformation from one level to another.

- horizontally representing relationships between artifacts on the same level, e.g. process to data, event to process, etc.

The fourth through sixth rows define the technology and the applications in the current IS environment, where the columns contain IS type artifacts corresponding to the six interrogatives as above. Synchronization of the EA environment and the application development environment is implied by vertical relationships between business processes (row three) and technical processes (row four).
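The row, column and relationship structure described above can be sketched as a small data structure. This is a minimal Python sketch under stated assumptions, not part of the Zachman Framework itself: the `FrameworkGrid` class, its methods and the example artifact names are hypothetical, and rows are referred to only by number, as in the text.

```python
# The six interrogative columns, in the order listed above.
COLUMNS = ("What", "How", "Where", "Who", "When", "Why")
ROW_COUNT = 6  # rows 1-3: business architecture; rows 4-6: technology


class FrameworkGrid:
    """Hypothetical container for artifacts held in framework cells."""

    def __init__(self):
        # One empty artifact list per (row, column) cell, both 1-indexed.
        self.cells = {
            (row, col): []
            for row in range(1, ROW_COUNT + 1)
            for col in range(1, len(COLUMNS) + 1)
        }
        self.links = []

    def add(self, row, column, artifact):
        """Place an artifact in the cell at the given row and column."""
        self.cells[(row, column)].append(artifact)

    def link(self, source, target):
        """Relate two (row, column, artifact) tuples and classify the link."""
        src_row, src_col, _ = source
        dst_row, dst_col, _ = target
        if src_row == dst_row:
            kind = "horizontal"  # same level, e.g. process to data
        elif src_col == dst_col:
            kind = "vertical"    # transformation from one level to another
        else:
            raise ValueError("links must stay within one row or one column")
        self.links.append((kind, source, target))
        return kind


grid = FrameworkGrid()
grid.add(3, 2, "Approve order")          # business process (row 3, How)
grid.add(4, 2, "approve_order service")  # technical process (row 4, How)
grid.add(3, 1, "Order")                  # business data object (row 3, What)

kind_vertical = grid.link((3, 2, "Approve order"), (4, 2, "approve_order service"))
kind_horizontal = grid.link((3, 2, "Approve order"), (3, 1, "Order"))
print(kind_vertical, kind_horizontal)
```

The vertical link between row three and row four is the kind of relationship that the synchronization between the EA environment and the application development environment relies on.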

Enterprise Architecture-based (EA) Approach

The typical EA Engagement will consist of a number of major tasks, all aimed at:

- aligning the Enterprise’s IT Investments with Business Process and Mission needs

- creating the capability to sustain and absorb ever-increasing rates of human, business and technology change.

Our approach emphasizes Organizational Change Management because the architected approach that we introduce is normally quite new to many corporations, and great care must be taken to ensure organizational adoption. This new way of thinking about the enterprise’s assets needs to be woven very carefully into the fabric of the organization.

The major tasks involved in a typical EA Engagement include the following:

- Program Management

- Process Architecture

- Data Architecture

- Application Architecture

- Technology Architecture

- EA Infrastructure

- EA Governance

- EA Communications

- Metadata Repository

Gavroshe has dedicated Principal Consultants and Senior Consultants who have deep skills and years of experience in each of the above categories of work. We configure exceptionally strong teams with seniority levels matching the strategic importance of your project. Our teams work seamlessly with our clients’ internal staff to achieve the following objectives:

- Alignment-building amongst your senior and executive management

- Engaging the business and harnessing key sponsors into the process

- Showcasing the EA work products to the rest of the organization

- Ensuring that your company’s key resources take ownership of the EA process.

Skill and Knowledge Transfer

Our engagements are underpinned by a formal Skills and Knowledge Transfer Program. Early in the engagement we will jointly identify mentor-protégé pairs and, for each matched pair, a Skills and Knowledge Transfer Contract will be established. Each mentoring agreement will contain clearly defined objectives for both mentor and protégé and will include components of formal training, on-the-job training/mentorship, and practical tasks. Both mentor and protégé will be measured periodically against these contracts to ensure that appropriate Skills and Knowledge Transfer is taking place.

Data Warehouse 2.0

In the never-ending quest to access any information, anywhere, anytime, an architecture is needed that includes data from both internal and external sources in a variety of formats. It must include operational data, historical data, legacy data, subscription databases, unstructured text, and data from Internet Service Providers. It must also include easily accessible metadata.

Today’s businesses require the ability to access and combine data from a variety of data stores, perform complex data analysis across these data stores, and create multidimensional views of data that represent the business analyst’s perspective of the data. Furthermore, there is a need to summarize, drill down, roll up, slice and dice the information across subject areas and business dimensions.
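To make the terms roll up, drill down and slice more concrete, here is a minimal Python sketch over a handful of fact rows. The `sales` data and the `aggregate` and `slice_rows` helpers are hypothetical and stand in for what a BI tool or database would normally do; the sketch only illustrates the operations named above.

```python
from collections import defaultdict

# Hypothetical fact rows with three business dimensions and one measure.
sales = [
    {"region": "East", "product": "Widget", "quarter": "Q1", "amount": 100},
    {"region": "East", "product": "Gadget", "quarter": "Q1", "amount": 150},
    {"region": "West", "product": "Widget", "quarter": "Q1", "amount": 200},
    {"region": "West", "product": "Widget", "quarter": "Q2", "amount": 50},
]


def aggregate(rows, dimensions, measure="amount"):
    """Sum the measure for each combination of the chosen dimensions."""
    totals = defaultdict(int)
    for row in rows:
        key = tuple(row[d] for d in dimensions)
        totals[key] += row[measure]
    return dict(totals)


def slice_rows(rows, dimension, value):
    """Keep only the rows where one dimension has a fixed value."""
    return [row for row in rows if row[dimension] == value]


# Roll up: summarize to a single dimension.
by_region = aggregate(sales, ["region"])

# Drill down: add a dimension to see more detail.
by_region_product = aggregate(sales, ["region", "product"])

# Slice: fix one dimension (quarter = Q1), then summarize the rest.
q1_by_region = aggregate(slice_rows(sales, "quarter", "Q1"), ["region"])

print(by_region)
print(by_region_product)
print(q1_by_region)
```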

Information Systems must support at least four levels of analytical processing in today’s information driven organizations:

- Simple queries and reports against current and historical data

- Ability to do “what if” processing across dimensions of data

- In-depth analysis of data using Data Mining and Statistical Analysis Tools

- Big Data Analytics

[/accordion][accordion title=”Big Data Analytics”]

Gavroshe approaches the topic of Big Data Analytics from a Use Case perspective, helping our clients rapidly identify opportunities that will support and drive innovation using big data and analytical insights.

Big data is an area of diversity, so it is important to understand where an organization can benefit most from analytical activities that require big data from the following categories:

- Sensor / Log (high velocity, volume, streaming)

- Semi-Structured (medium velocity, volume), also known as Unstructured

- Digital Audio (high volume)

- Digital Image (high volume)

- Geo-Spatial (high velocity, volume)

Technologies that enable data acquisition, management, and analytics vary across the big data domain, so ensuring that the technologies and approaches chosen are optimized for the type of analytics sought is critical to avoiding costly missteps.

Our Big Data Analytics solution addresses the following areas:

- Big data category rationalization to inform and frame technology and integration architecture decisions

- Strategy & Governance for Big Data Analytics

- Use Case Identification and Profiling to develop a solid understanding of “requirements to enable” the analytics necessary

- Establishment of a solid, iterative “research to production” cycle to ensure expeditious enablement of the Big Data Analytics capability

- Development of Big Data Analytics Solution Architecture and identification of appropriate tools and technologies to enable rapid prototyping

- Big Data Analytics thought-leadership to work with the business and IT teams to accelerate the production of meaningful outcomes early in the POC process

For more information on how Gavroshe can apply this engagement to your Big Data Analytics opportunity, please contact us.

[/accordion][accordion title=”Data Scientist”]

The Data Scientist is one of the hottest new roles in driving Big Data Analytics. Gavroshe has an expanding pool of expertise in this critical new area. Following is a summary of the type of expertise we offer in this category:

- Strategic thinking about the uses of Big Data and how data use interacts with the existing Enterprise Data Warehouse and data model designs, including data studies and data discovery around new data sources or new uses for existing data sources

- Design and implementation of processes for complex, large-scale data sets used for modeling, data mining, and research techniques

- Design and construction of large and complex structured and unstructured data sets from both internal and external sources

- Design and implementation of statistical data quality procedures around big data sources

- Identification and implementation of the statistical analysis tools required for accessing and analyzing the data

- Performance of statistical analyses on existing data sets held in relational databases such as MS SQL Server, MySQL, Oracle, DB2, and Teradata

- Support for implementing Data Visualization and reporting solutions

If you have a need in this area, contact an Account Executive for more information.

[/accordion][accordion title=”Cloud Infrastructure Architecture”]

Gavroshe helps clients predictably realize the business benefits achievable with Infrastructure as a Service, using a pragmatic capabilities-based approach. It’s possible to accomplish significant gains in organizational agility and cost-effectiveness through adoption of a cloud infrastructure services framework, but doing so requires programmatic and organized methods. Whether you are seeking public or private cloud solutions, our Cloud-Optimized Infrastructure Architecture expertise helps your team position your company to capitalize on the significant scaling and cost reduction opportunities afforded when the cloud is done right.

To learn more about how we can help your team win in the race for the clouds, contact us.[/accordion][accordion title=”Strategic Staff Augmentation”]

In every category of expertise found on our website, Gavroshe has seasoned Sr. Consultants who can be engaged on a “Strategic” staff augmentation (time and materials) basis. This includes engagement on a fractional to full-time basis as necessary to help achieve your objective. Where needed, engagements can include the use of proven Gavroshe Intellectual Property Accelerators (IPAs), such as methodologies, process documentation, role descriptions, project planning templates, playbooks and the like, and pricing for services and IP can be customized accordingly.

Please contact an account executive to learn more about this offering.

[/accordion][accordion title=”Database Performance Tuning”]

- Oracle

- Exadata

- Teradata

- Netezza

- Greenplum

- MS SQL Server

- Parallel Data Warehouse

[/accordion][/accordions]